Comparing Epsilon Greedy and Thompson Sampling model for Multi-Armed Bandit algorithm on Marketing Dataset

Abstract

A/B testing is a standard practice in the marketing processes of many e-commerce companies. Through well-designed A/B tests, advertisers can learn when and how to maximize marketing efforts and drive active promotions. Although many algorithms for this problem are theoretically well developed, their empirical validation is typically limited. In practical terms, standard A/B experimentation earns less money than more advanced machine learning methods. This paper presents a thorough empirical study of the most popular multi-armed bandit algorithms. Three important observations can be made from our results. First, simple heuristics such as Epsilon Greedy and Thompson Sampling outperform theoretically sound algorithms in most settings by a significant margin. This report reviews the state of A/B testing and describes and compares some typical algorithms (multi-armed bandits) used to optimize A/B testing. We found that Epsilon Greedy was the clear winner for optimizing payouts in this setting.
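The abstract contrasts a fixed-split A/B test with two bandit strategies. As an illustration only, and not the authors' implementation or dataset, the sketch below simulates Bernoulli arms (hypothetical conversion rates for three ad variants) and compares a uniform random traffic split, epsilon-greedy, and Thompson sampling with Beta(1, 1) priors. The function names, the conversion rates, `epsilon=0.1`, and the Bernoulli reward model are all assumptions made for this example.

```python
import random


def simulate_ab_test(true_rates, n_rounds, seed=0):
    """Classic A/B/n test: every visitor is assigned to an arm uniformly
    at random for the whole experiment, with no adaptation."""
    rng = random.Random(seed)
    k = len(true_rates)
    pulls = [0] * k
    total = 0
    for _ in range(n_rounds):
        arm = rng.randrange(k)
        reward = 1 if rng.random() < true_rates[arm] else 0  # Bernoulli conversion
        pulls[arm] += 1
        total += reward
    return total, pulls


def simulate_epsilon_greedy(true_rates, n_rounds, epsilon=0.1, seed=0):
    """Epsilon-greedy: with probability epsilon pick a random arm (explore),
    otherwise pick the arm with the highest observed conversion rate (exploit)."""
    rng = random.Random(seed)
    k = len(true_rates)
    pulls = [0] * k
    successes = [0] * k
    total = 0
    for _ in range(n_rounds):
        if rng.random() < epsilon:
            arm = rng.randrange(k)
        else:
            # Arms never pulled get an infinite estimate, so each is tried once.
            means = [successes[i] / pulls[i] if pulls[i] else float("inf")
                     for i in range(k)]
            arm = max(range(k), key=lambda i: means[i])
        reward = 1 if rng.random() < true_rates[arm] else 0
        pulls[arm] += 1
        successes[arm] += reward
        total += reward
    return total, pulls


def simulate_thompson(true_rates, n_rounds, seed=0):
    """Thompson sampling with Beta(1, 1) priors: draw one plausible rate
    from each arm's Beta posterior and pull the arm with the largest draw."""
    rng = random.Random(seed)
    k = len(true_rates)
    alpha = [1] * k  # 1 + observed conversions
    beta = [1] * k   # 1 + observed non-conversions
    total = 0
    for _ in range(n_rounds):
        draws = [rng.betavariate(alpha[i], beta[i]) for i in range(k)]
        arm = max(range(k), key=lambda i: draws[i])
        reward = 1 if rng.random() < true_rates[arm] else 0
        alpha[arm] += reward
        beta[arm] += 1 - reward
        total += reward
    # Each round adds exactly 1 to alpha or beta of the chosen arm.
    pulls = [alpha[i] + beta[i] - 2 for i in range(k)]
    return total, pulls


if __name__ == "__main__":
    rates = [0.04, 0.05, 0.07]  # hypothetical conversion rates per variant
    n = 10_000
    print("A/B split:     ", simulate_ab_test(rates, n))
    print("Epsilon-greedy:", simulate_epsilon_greedy(rates, n))
    print("Thompson:      ", simulate_thompson(rates, n))
```

Each function returns the total number of conversions and how many visitors each arm received. Epsilon-greedy keeps spending a fixed fraction `epsilon` of traffic on exploration, whereas Thompson sampling explores less as the Beta posteriors concentrate; which one earns more depends on the rates, the horizon, and `epsilon`, so output from this toy simulation should not be read as reproducing the paper's findings.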

Keywords

machine learning; Multi-Armed Bandits; marketing

DOI: https://doi.org/10.47738/jads.v2i2.28

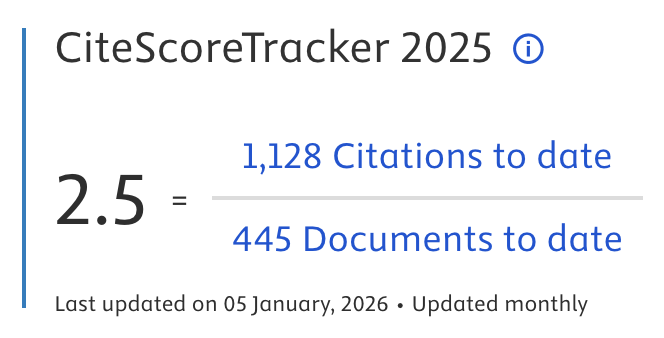

Journal of Applied Data Sciences

| Field | Details |
| --- | --- |
| ISSN | 2723-6471 (Online) |
| Collaborated with | Computer Science and Systems Information Technology, King Abdulaziz University, Kingdom of Saudi Arabia |
| Publisher | Bright Publisher |
| Website | http://bright-journal.org/JADS |
| Email | taqwa@amikompurwokerto.ac.id (principal contact); support@bright-journal.org (technical issues) |

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.