Leveraging K-Nearest Neighbors with SMOTE and Boosting Techniques for Data Imbalance and Accuracy Improvement

Abstract

This research addresses low accuracy in sentiment analysis of social media posts about Israeli products, where a baseline K-NN classifier achieved only 64% accuracy. Given the ongoing Israeli-Palestinian conflict, which has drawn widespread international attention and strong opinions, understanding public sentiment towards Israeli products is crucial. To improve accuracy, the study uses SMOTE to handle class imbalance and combines K-NN with two boosting algorithms, AdaBoost and XGBoost, selected for their effectiveness on imbalanced and complex datasets. AdaBoost was chosen for its ability to improve accuracy by focusing on misclassified instances, while XGBoost was chosen for its efficiency and robustness on large datasets with many features. The research process includes data pre-processing (cleaning, normalization, tokenization, stopword removal, and stemming), labeling with a lexicon-based approach, and feature extraction with CountVectorizer and TF-IDF. SMOTE was applied to oversample the minority class until it matched the number of instances in the majority class, ensuring balanced representation before model training. A dataset of 1,145 records was split into training and testing data at a ratio of 70:30. Results show that SMOTE increased K-NN accuracy from 64% to 77%. Combining K-NN with AdaBoost after SMOTE achieved 72% accuracy, which is lower than the 77% achieved with SMOTE alone but higher than the 68% achieved by K-NN with AdaBoost without SMOTE. This discrepancy may be attributed to the added complexity introduced by AdaBoost, which may not combine as effectively with SMOTE as XGBoost does on this dataset. In contrast, K-NN with XGBoost after SMOTE reached the highest accuracy, 88%. Boosting without SMOTE resulted in lower accuracies: 68% for K-NN with AdaBoost and 64% for K-NN with XGBoost. The combination of K-NN with SMOTE and XGBoost substantially improves model accuracy and reliability for sentiment analysis on social media.
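The oversampling step described in the abstract can be illustrated with a small sketch. This is a minimal pure-Python illustration of the SMOTE idea (interpolating between a minority point and one of its nearest minority neighbours), not the authors' implementation. The function name `smote_oversample`, the neighbour count `k`, the fixed seed, and the toy two-dimensional points are all assumptions made for illustration; in the study, SMOTE is applied to the extracted text features rather than to hand-written points.

```python
import math
import random


def smote_oversample(minority, n_majority, k=5, seed=0):
    """Generate synthetic minority points until the class size equals n_majority.

    minority: list of equal-length numeric tuples (feature vectors).
    Returns only the new synthetic points.
    """
    rng = random.Random(seed)
    n_needed = n_majority - len(minority)
    if n_needed <= 0 or len(minority) < 2:
        return []  # already balanced, or no neighbour to interpolate with
    k = min(k, len(minority) - 1)

    synthetic = []
    for _ in range(n_needed):
        i = rng.randrange(len(minority))
        base = minority[i]
        # Indices of the other minority points, sorted by distance to the base point.
        others = [j for j in range(len(minority)) if j != i]
        others.sort(key=lambda j: math.dist(base, minority[j]))
        # Pick one of the k nearest neighbours at random.
        neighbour = minority[rng.choice(others[:k])]
        # Place the new point at a random position on the segment base -> neighbour.
        gap = rng.random()
        synthetic.append(tuple(b + gap * (n - b) for b, n in zip(base, neighbour)))
    return synthetic


# Toy example (hypothetical data): 3 minority points, majority class assumed to have 10.
minority = [(1.0, 1.0), (1.2, 0.9), (0.8, 1.1)]
new_points = smote_oversample(minority, n_majority=10, k=2)
print(len(minority) + len(new_points))  # 10
```

Because every synthetic point lies on a line segment between two existing minority points, the oversampled class stays within the region already occupied by the minority data. Oversampling is normally applied to the training portion only, so that the test data contains no synthetic records.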

Keywords

K-NN, XGBoost, AdaBoost, SMOTE, Machine Learning


DOI:

https://doi.org/10.47738/jads.v5i4.343

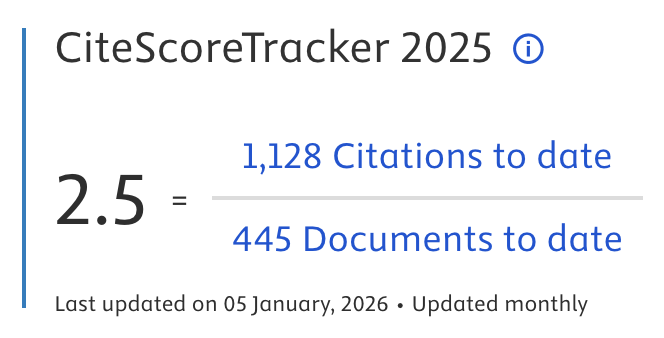

Citation Analysis:

Refbacks

- There are currently no refbacks.

Journal of Applied Data Sciences

| ISSN | : | 2723-6471 (Online) |
| Collaborated with | : | Computer Science and Systems Information Technology, King Abdulaziz University, Kingdom of Saudi Arabia. |
| Publisher | : | Bright Publisher |
| Website | : | http://bright-journal.org/JADS |
| Email | : | taqwa@amikompurwokerto.ac.id (principal contact) |
| | | support@bright-journal.org (technical issues) |

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0
