Optimizing Function-Level Source Code Classification Using Meta-Trained CodeBERT in Low-Resource Settings

Abstract

This study investigates the effectiveness of a meta-trained transformer-based model, CodeBERT, for classifying source code functions in environments with limited labeled data. The primary objective is to improve the accuracy and generalizability of function-level code classification using few-shot learning, a strategy in which the model learns from only a few labeled examples per category. We introduce a meta-learning framework that enables CodeBERT to adapt to new function types with minimal supervision. This addresses a common limitation of traditional code classification methods, which require extensive labeled datasets and manual feature engineering. The methodology uses episodic few-shot classification, in which each episode simulates a low-resource task with five labeled (support) and five unlabeled (query) samples per function class. A balanced subset of Python functions was sampled from the CodeXGLUE benchmark, covering ten function categories with equal representation. The source code was preprocessed by removing comments and docstrings, then tokenized to a fixed length of 128 tokens to match the model's input format. The meta-trained CodeBERT was evaluated across 10 episodes, each with a different task composition. The model achieved an average classification accuracy of 73.0%. Accuracy was high on function categories with distinctive syntax patterns and lower on categories with overlapping logic or naming structures. Despite this variability, the model maintained accuracy above 60% in every episode. These findings suggest that meta-learning substantially enhances the adaptability of CodeBERT to unseen tasks under data-constrained conditions. This research demonstrates that meta-trained transformer models can serve as practical tools for real-time code analysis, particularly in integrated development environments and continuous integration pipelines.
Future work may include extending the framework to other programming languages and incorporating semantic code representations to further reduce classification ambiguity.
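The preprocessing step described in the abstract (removing comments and docstrings, then fixing the input length at 128 tokens) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' pipeline. It assumes Python 3.9+ for `ast.unparse`. The regex tokenizer, the `<pad>` token, and the helper names are hypothetical stand-ins for CodeBERT's own subword tokenizer. With the Hugging Face `transformers` library, the length-fixing step would typically be a tokenizer call with `max_length=128`, `truncation=True` and `padding="max_length"`.

```python
import ast
import re

PAD = "<pad>"  # hypothetical padding token, standing in for the model's own


def strip_comments_and_docstrings(source: str) -> str:
    """Remove comments and docstrings from Python source.

    Comments vanish because `ast` does not store them; docstrings are the
    leading string-literal statement of a module, class, or function body.
    """
    tree = ast.parse(source)
    for node in ast.walk(tree):
        if isinstance(node, (ast.Module, ast.ClassDef, ast.FunctionDef, ast.AsyncFunctionDef)):
            body = node.body
            if (
                body
                and isinstance(body[0], ast.Expr)
                and isinstance(body[0].value, ast.Constant)
                and isinstance(body[0].value.value, str)
            ):
                # Drop the docstring; insert `pass` if the body would become empty.
                node.body = body[1:] or [ast.Pass()]
    ast.fix_missing_locations(tree)
    return ast.unparse(tree)  # requires Python 3.9+


def to_fixed_length(source: str, max_len: int = 128) -> list[str]:
    """Truncate or pad a token sequence to exactly `max_len` tokens."""
    # Hypothetical regex tokenizer: word runs, or single punctuation characters.
    tokens = re.findall(r"\w+|[^\w\s]", source)[:max_len]
    return tokens + [PAD] * (max_len - len(tokens))


snippet = '''def add(a, b):
    """Return the sum."""
    # simple addition
    return a + b
'''
clean = strip_comments_and_docstrings(snippet)
print(clean)
print(to_fixed_length(clean)[:12])
```

Because `ast` discards comments at parse time, unparsing the tree removes them without a separate pass. The trade-off is that the original formatting (blank lines, quote style) is normalized, which is usually acceptable when the next step is tokenization.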
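The episodic evaluation protocol in the abstract (10 classes per episode, five labeled support and five unlabeled query examples per class, accuracy averaged over 10 episodes) can be illustrated with the sketch below. The abstract does not state which meta-learning algorithm is used, so this sketch assumes a nearest-prototype (Prototypical Network style) classifier as a stand-in. It also assumes that each function has already been mapped to a fixed-size embedding vector, which in practice might be a CodeBERT encoder output. The helper names (`sample_episode`, `prototypes`, `episode_accuracy`, `evaluate`) and the synthetic vectors in the usage part are hypothetical, and meta-training itself is not shown.

```python
import random
from statistics import mean


def sample_episode(pool, n_way=10, k_shot=5, n_query=5, rng=random):
    """Draw one N-way episode with k_shot support and n_query query items per class.

    `pool` maps a class label to a list of examples (here: embedding vectors).
    """
    classes = rng.sample(sorted(pool), n_way)
    support, query = [], []
    for label in classes:
        items = rng.sample(pool[label], k_shot + n_query)
        support += [(x, label) for x in items[:k_shot]]
        query += [(x, label) for x in items[k_shot:]]
    return support, query


def prototypes(support):
    """Class prototype = element-wise mean of that class's support embeddings."""
    by_class = {}
    for x, label in support:
        by_class.setdefault(label, []).append(x)
    return {label: [mean(dim) for dim in zip(*xs)] for label, xs in by_class.items()}


def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))


def episode_accuracy(support, query):
    """Fraction of query items whose nearest prototype has the correct label."""
    protos = prototypes(support)
    correct = sum(
        min(protos, key=lambda c: sq_dist(x, protos[c])) == label
        for x, label in query
    )
    return correct / len(query)


def evaluate(pool, n_episodes=10, seed=0):
    """Return (mean accuracy, worst-episode accuracy) over n_episodes episodes."""
    rng = random.Random(seed)
    accs = [episode_accuracy(*sample_episode(pool, rng=rng)) for _ in range(n_episodes)]
    return mean(accs), min(accs)


# Usage with synthetic, well-separated embeddings (hypothetical data, not the paper's).
data_rng = random.Random(42)
pool = {
    f"class_{c}": [
        [(5.0 if d == c else 0.0) + data_rng.gauss(0, 0.5) for d in range(10)]
        for _ in range(20)
    ]
    for c in range(10)
}
avg_acc, worst_acc = evaluate(pool, n_episodes=10)
print(f"mean accuracy {avg_acc:.3f}, worst episode {worst_acc:.3f}")
```

Reporting both the mean and the worst episode mirrors the abstract's two summary figures (an average accuracy and a per-episode floor). The synthetic data here is deliberately easy and is not meant to reproduce the reported numbers.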

Keywords

Meta-Learning; Few-Shot Learning; Code Classification; CodeBERT; Transformer Models; Low-Resource Software Engineering; Software Process


DOI: https://doi.org/10.47738/jads.v6i3.902

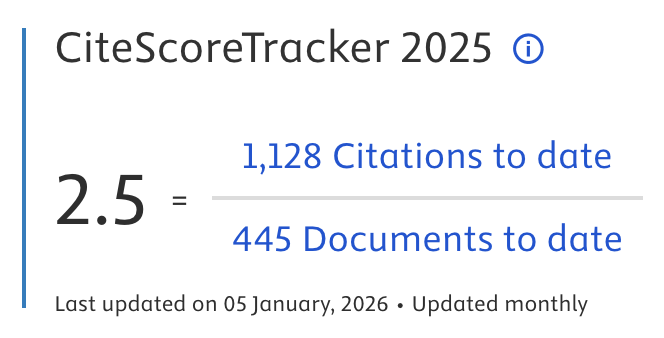


Journal of Applied Data Sciences

| Item | Details |
| --- | --- |
| ISSN | 2723-6471 (Online) |
| Collaborated with | Computer Science and Systems Information Technology, King Abdulaziz University, Kingdom of Saudi Arabia |
| Publisher | Bright Publisher |
| Website | http://bright-journal.org/JADS |
| Contact | taqwa@amikompurwokerto.ac.id (principal contact); support@bright-journal.org (technical issues) |

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License.

